Django logging

Logging is one of the most useful and also one of the most underused application management practices. If you're still not using logging in your Django projects, or are using Python print() statements to gain insight into what an application is doing, you're missing out on a great deal of functionality. Up next, you'll learn about Python core logging concepts, how to set up Django custom logging and how to use a monitoring service to track log messages.

Python core logging concepts

Django logging is built on top of Python's logging package. The Python logging package provides a robust and flexible way to set up application logging. In case you've never used Python's logging package, I'll provide a brief overview of its core concepts. There are four core concepts in Python logging:

- Loggers.- Provide the initial entry point to group log messages. Generally, each Python module (i.e. .py file) has a single logger to assign its log messages. However, it's also possible to define multiple loggers in the same module (e.g. one logger for business logic, another logger for database logic, etc.). In addition, it's also possible to use the same logger across multiple Python modules or .py files.

- Handlers.- Are used to redirect log messages (created by loggers) to a destination. Destinations can include flat files, a server's console, an email or SMS messages, among other destinations. It's possible to use the same handler in multiple loggers, just as it's possible for a logger to use multiple handlers.

- Filters.- Offer a way to apply rules on log messages. For example, you can use a filter to send log messages generated by the same logger to different handlers.

- Formatters.- Are used to specify the final format for log messages.
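The four concepts above can be sketched with nothing but the core Python logging package. This is a minimal illustration, not Django's own setup: the logger name coffeehouse.example, the NoPasswordFilter class and the in-memory stream are assumptions made for the example.

```python
import io
import logging

# Hypothetical in-memory destination so the example writes nothing to disk
stream = io.StringIO()

# Formatter: final layout of each message
formatter = logging.Formatter('%(levelname)s %(name)s: %(message)s')

# Filter: a rule applied to each record (here, drop messages mentioning 'password')
class NoPasswordFilter(logging.Filter):
    def filter(self, record):
        return 'password' not in record.getMessage()

# Handler: destination for the messages, with a filter and a formatter attached
handler = logging.StreamHandler(stream)
handler.setFormatter(formatter)
handler.addFilter(NoPasswordFilter())

# Logger: entry point used by application code
logger = logging.getLogger('coffeehouse.example')
logger.setLevel(logging.DEBUG)
logger.addHandler(handler)
logger.propagate = False  # keep the example isolated from the root logger

logger.info('Store opened')
logger.info('User password is 1234')  # rejected by the filter

output = stream.getvalue()
```

Only the first message reaches the stream, since the second one is rejected by the filter before the handler emits it.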

With this brief overview of Python logging concepts, let's jump straight into exploring Django's default logging functionality.

Django default logging

The logging configuration for

Django projects is defined in the LOGGING variable in

settings.py. For the moment, don't even bother opening

your project's settings.py file because you won't see

LOGGING in it. This variable isn't hard-coded when you

create a project, but it does have some logging values in effect if

it isn't declared. Listing 5-15 shows the default

LOGGING values if it isn't declared in

settings.py.

Listing 5-15. Default LOGGING in Django projects

LOGGING = {

'version': 1,

'disable_existing_loggers': False,

'filters': {

'require_debug_false': {

'()': 'django.utils.log.RequireDebugFalse',

},

'require_debug_true': {

'()': 'django.utils.log.RequireDebugTrue',

},

},

'handlers': {

'console': {

'level': 'INFO',

'filters': ['require_debug_true'],

'class': 'logging.StreamHandler',

},

'null': {

'class': 'logging.NullHandler',

},

'mail_admins': {

'level': 'ERROR',

'filters': ['require_debug_false'],

'class': 'django.utils.log.AdminEmailHandler'

}

},

'loggers': {

'django': {

'handlers': ['console'],

},

'django.request': {

'handlers': ['mail_admins'],

'level': 'ERROR',

'propagate': False,

},

'django.security': {

'handlers': ['mail_admins'],

'level': 'ERROR',

'propagate': False,

},

'py.warnings': {

'handlers': ['console'],

},

}

}

In summary, the default Django logging settings illustrated in listing 5-15 have the following logging behaviors:

- Console logging or the console handler is only done when DEBUG=True, for log messages worse than INFO (inclusive) and only for the Python package django -- and its children (e.g. django.request, django.contrib) -- as well as the Python package py.warnings.

- Admin logging or the mail_admins handler -- which sends emails to ADMINS -- is only done when DEBUG=False, for log messages worse than ERROR (inclusive) and only for the Python packages django.request and django.security.

Let's first break down the

handlers section in listing 5-15. Handlers define

locations to send log messages and there are three in listing 5-15:

console, null and

mail_admins. The handler names by themselves do

nothing -- they are simply reference names -- the relevant actions

are defined in the associated properties dictionary. All the

handlers have a class property which defines the

backing Python class that does the actual work.

The console handler is assigned the logging.StreamHandler class which is part of the core Python logging package. This class sends logging output to streams such as standard output and standard error (standard error being its default), and as the handler name suggests, this is typically the system console or screen where Django runs.

The null handler is

assigned the logging.NullHandler class which is also

part of the core Python logging package and which generates no

output.

The mail_admins

handler is assigned the

django.utils.log.AdminEmailHandler class, which is a

Django custom handler utility that sends logging output as an email

to people defined as ADMINS in

settings.py -- see the previous section on setting up

settings.py for the real world for more information on

the ADMINS variable.

Another property in handlers is level which defines the threshold level at which the handler must accept log messages. There are five threshold levels for Python logging, from most to least severe they are: CRITICAL, ERROR, WARNING, INFO and DEBUG. The INFO level for the console handler indicates that all log messages worse or equal to INFO -- which is every level, except DEBUG -- should be processed by the handler, a reasonable setting as the console can handle many messages. The ERROR level for the mail_admins handler indicates that only messages worse or equal to ERROR -- which is just ERROR and CRITICAL -- should be processed by the handler, a reasonable setting as only the two worst types of error messages should trigger emails to administrators.
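To make the threshold idea concrete, here is a small sketch using only the core Python logging package. It prints nothing, it just collects which levels a handler with an ERROR threshold accepts; the logger name levels.example is an assumption for illustration.

```python
import io
import logging

# Numeric values behind the five standard level names
levels = {name: getattr(logging, name)
          for name in ('CRITICAL', 'ERROR', 'WARNING', 'INFO', 'DEBUG')}

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setLevel(logging.ERROR)  # same threshold as the mail_admins handler
handler.setFormatter(logging.Formatter('%(levelname)s'))

logger = logging.getLogger('levels.example')  # hypothetical logger name
logger.setLevel(logging.DEBUG)  # the logger itself lets every level through
logger.propagate = False
logger.addHandler(handler)

# Emit one message per level, from least to most severe
for level in sorted(levels.values()):
    logger.log(level, 'message')

accepted = stream.getvalue().split()
```

After running it, accepted holds only ERROR and CRITICAL, the two levels at or above the handler threshold.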

The other property in handlers is

filters which defines an additional layer to restrict

log messages for a handler. Handlers can accept multiple filters,

which is why the filters property accepts a Python

list. The console handler has a single filter

require_debug_true and the mail_admins

handler has a single filter require_debug_false.

Filters are defined in their own

block as you can observe in listing 5-15. The

require_debug_false filter is backed by the

django.utils.log.RequireDebugFalse class which checks

if a Django project has DEBUG=False, whereas the

require_debug_true filter is backed by the

django.utils.log.RequireDebugTrue class which checks

if a project has DEBUG=True. This means the

console handler only accepts log messages if a Django

project has DEBUG=True and the

mail_admins handler only accepts log messages if a

Django project has DEBUG=False.

Now that you understand handlers and filters, let's take a look at the loggers section.

Logger definitions generally map directly to Python packages and

have parent-child relationships. For example, Python modules (i.e.

.py files) that belong to a package named

coffeehouse generally have a logger named

coffeehouse and Python modules that belong to the

package coffeehouse.about generally have a logger

named coffeehouse.about. The dot notation in logger

names also represents a parent-child relationship, so the

coffeehouse.about logger is considered the child of

the coffeehouse logger.

In listing 5-15 there are four

loggers: django, django.request,

django.security and py.warnings. The

django logger indicates that all log messages

associated with it and its children be processed by the

console handler.

The django.request

logger indicates that all log messages associated with it and its

children be processed by the mail_admins handler. The

django.request logger also has the 'level': 'ERROR' property to provide the threshold level at which the logger itself accepts log messages -- a check that's applied before, and in addition to, the handler level property, so a message must pass both. And in addition, the django.request logger also has the 'propagate': False statement to indicate the logger should not propagate messages to parent loggers (e.g. django is the parent of django.request).
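The parent-child relationship and the effect of propagate can be seen in a short sketch with the core Python logging package. The logger names (shop, shop.orders, shop.payments) and the ListHandler class are hypothetical, chosen only for this illustration.

```python
import logging

class ListHandler(logging.Handler):
    """Hypothetical handler that stores messages in a list."""
    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())

parent_handler = ListHandler()
parent = logging.getLogger('shop')
parent.setLevel(logging.DEBUG)
parent.addHandler(parent_handler)
parent.propagate = False  # keep the example isolated from the root logger

# Child logger: no handler of its own, messages travel up to 'shop'
child = logging.getLogger('shop.orders')

# Child logger with propagate=False: messages stop here
isolated = logging.getLogger('shop.payments')
isolated.addHandler(logging.NullHandler())
isolated.propagate = False

child.error('reaches the parent handler')
isolated.error('stops at shop.payments')

seen = parent_handler.messages
```

Only the message from shop.orders ends up in the parent's handler, which is the same mechanism that keeps django.request messages away from the handlers of the django logger in listing 5-15.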

Next, we have the

django.security logger which is identical in

functionality to the django.request logger. And the

py.warnings which indicates that all log messages

associated with it and its children be processed by the

console handler.

Finally, there are the first two

lines in listing 5-15 which are associated with Python logging in

general. The version key identifies the configuration

version as 1, which at present is the only Python

logging version. And the disable_existing_loggers key

is used to disable all existing Python loggers. If

disable_existing_loggers is False it

keeps the pre-existing logger values and if it's set to

True it disables all pre-existing loggers values. Note

that even if you use 'disable_existing_loggers': False

in your own LOGGING variable you can redefine/override

some or all of the pre-existing logger values.
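As a rough illustration of disable_existing_loggers outside of Django, the following sketch applies a dictionary configuration with logging.config.dictConfig, which consumes the same dictionary format as LOGGING. The logger name legacy.module is an assumption for the example.

```python
import logging
import logging.config

# A logger that exists before the configuration is applied
legacy = logging.getLogger('legacy.module')  # hypothetical name

logging.config.dictConfig({
    'version': 1,
    'disable_existing_loggers': True,
    'handlers': {
        'null': {'class': 'logging.NullHandler'},
    },
    'loggers': {
        # Loggers named in the configuration stay enabled
        'coffeehouse': {'handlers': ['null'], 'level': 'DEBUG'},
    },
})

configured = logging.getLogger('coffeehouse')
```

After the call, legacy.disabled is true while configured.disabled is false: pre-existing loggers that the new configuration doesn't mention are switched off, while the ones it declares keep working.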

Now that you have a firm

understanding of what Django logging does in its default state,

I'll describe how to create log messages in a Django project and

then describe how to create custom LOGGING

configurations.

Create log messages

At the top of any Python module

or .py file you can create loggers by using the

getLogger method of the Python logging

package. The getLogger method receives the name of the

logger as its input parameter. Listing 5-16 illustrates the

creation of two logger instances using __name__ and

the hard-coded dba name.

Listing 5-16 Define loggers in a Python module

# Python logging package

import logging

# Standard instance of a logger with __name__

stdlogger = logging.getLogger(__name__)

# Custom instance logging with explicit name

dbalogger = logging.getLogger('dba')

The Python __name__ syntax used for getLogger in listing 5-16 automatically assigns the module's dotted import name as the logger name. This means that if the logger is defined in a module under the application directory coffeehouse/about/views.py, the logger receives the name coffeehouse.about.views. So by relying on the __name__ syntax, loggers are automatically named after the origin of the log message.

Don't worry about having dozens

or hundreds of loggers in a Django project for each module or

.py file. As described in the previous section, Python

logging works with inheritance, so you can define a single handler

for a parent logger (e.g. coffeehouse) that handles

all children loggers (e.g. coffeehouse.about,

coffeehouse.about.views, coffeehouse.drinks,

coffeehouse.drinks.models).

Sometimes it's convenient to

define a logger with an explicit name to classify certain types of

messages. In listing 5-16 you can see a logger named

dba that's used for messages related to database

issues. This way database administrators can consult their own

logging stream without the need to see log messages from other

parts of the application.

Once you have loggers in a module

or .py file, you can define log messages with one of

several methods depending on the severity of a message that needs

to be reported. These methods are illustrated in the following

list:

- <logger_name>.critical().- Most severe logging level. Use it to report potentially catastrophic application events (e.g. something that can halt or crash an application).

- <logger_name>.error().- Second most severe logging level. Use it to report important events (e.g. unexpected behaviors or conditions that cause end users to see an error).

- <logger_name>.warning().- Mid-level logging level. Use it to report relatively important events (e.g. unexpected behaviors or conditions that shouldn't happen, yet don't cause end users to notice the issue).

- <logger_name>.info().- Informative logging level. Use it to report informative events in an application (e.g. application milestones or user activity).

- <logger_name>.debug().- Debug logging level. Use it to report step by step logic that can be difficult to write (e.g. complex business logic or database queries).

- <logger_name>.log().- Use it to manually emit log messages with a specific log level.

- <logger_name>.exception().- Use it to create an error level logging message, wrapped with the current exception stack.
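The two less obvious methods, log() and exception(), can be sketched as follows with the core Python logging package. The logger name methods.example and the in-memory stream are assumptions for the example.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter('%(levelname)s %(message)s'))

logger = logging.getLogger('methods.example')  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(handler)

# log() takes the level explicitly; arguments are merged into the message lazily
logger.log(logging.WARNING, 'Stock is low: %d units', 3)

try:
    1 / 0
except ZeroDivisionError:
    # exception() logs at ERROR level and appends the current traceback
    logger.exception('Could not compute average')

lines = stream.getvalue().splitlines()
```

The first captured line is the WARNING message, the second is the ERROR message and the remaining lines hold the traceback, ending with the exception itself.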

Which methods you use to log messages across your project is entirely up to you. As far as the logging levels are concerned, just try to be consistent with the selection criteria. You can always adjust the run-time logging level to deactivate log messages of a certain level.

In addition, I would also recommend you use the most descriptive log messages possible to maximize the benefits of logging. Listing 5-17 illustrates a series of examples using several logging methods and messages:

Listing 5-17 Define log messages in a Python module

# Python logging package
import logging

# Standard instance of a logger with __name__
stdlogger = logging.getLogger(__name__)

# Custom instance logging with explicit name
dbalogger = logging.getLogger('dba')

def index(request):
    stdlogger.debug("Entering index method")

def contactform(request):
    stdlogger.info("Call to contactform method")
    try:
        stdlogger.debug("Entering store_id conditional block")
        # Logic to handle store_id
    except Exception as e:
        # exception() logs at ERROR level and appends the current traceback
        stdlogger.exception(e)
    stdlogger.info("Starting search on DB")
    try:
        stdlogger.info("About to search db")
        # Logic to search db
    except Exception as e:
        stdlogger.error("Error in searchdb method")
        dbalogger.error("Error in searchdb method, stack %s", e)

As you can see in listing 5-17, there are various log messages of different levels using both loggers described in listing 5-16. The log messages are spread out depending on their level in either the method body or inside try/except blocks.

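If you place the loggers and logging statements like the ones in listing 5-17 in a Django project, you'll see that logging wise almost nothing happens! On Python 2, what you'll see in the console are messages in the form 'No handlers could be found for logger <logger_name>', while on Python 3 only messages of WARNING level or worse are printed, unformatted, to standard error by Python's last-resort handler.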

This is because by default Django

doesn't know anything about your loggers! It only knows about the

default loggers described in listing 5-15. In the next section,

I'll describe how to create a custom LOGGING

configuration so you can see your project log messages.

Custom logging

Since there are four different components you can mix and match in Django logging (i.e. loggers, handlers, filters and formatters), there is an almost endless number of variations to create custom logging configurations.

In the following sections, I'll describe some of the most common custom logging configurations for Django projects, which include: overriding default Django logging behaviors (e.g. not sending emails), customizing the format of log messages and sending logging output to different destinations (e.g. files).

Listing 5-18 illustrates a custom

LOGGING configuration you would place in a project's

settings.py file, covering these common requirements.

The sections that follow explain each configuration option.

Listing 5-18. Custom LOGGING Django configuration

LOGGING = {

'version': 1,

'disable_existing_loggers': True,

'filters': {

'require_debug_false': {

'()': 'django.utils.log.RequireDebugFalse',

},

'require_debug_true': {

'()': 'django.utils.log.RequireDebugTrue',

},

},

'formatters': {

'simple': {

'format': '[%(asctime)s] %(levelname)s %(message)s',

'datefmt': '%Y-%m-%d %H:%M:%S'

},

'verbose': {

'format': '[%(asctime)s] %(levelname)s [%(name)s.%(funcName)s:%(lineno)d] %(message)s',

'datefmt': '%Y-%m-%d %H:%M:%S'

},

},

'handlers': {

'console': {

'level': 'DEBUG',

'filters': ['require_debug_true'],

'class': 'logging.StreamHandler',

'formatter': 'simple'

},

'development_logfile': {

'level': 'DEBUG',

'filters': ['require_debug_true'],

'class': 'logging.FileHandler',

'filename': '/tmp/django_dev.log',

'formatter': 'verbose'

},

'production_logfile': {

'level': 'ERROR',

'filters': ['require_debug_false'],

'class': 'logging.handlers.RotatingFileHandler',

'filename': '/var/log/django/django_production.log',

'maxBytes' : 1024*1024*100, # 100MB

'backupCount' : 5,

'formatter': 'simple'

},

'dba_logfile': {

'level': 'DEBUG',

# No 'filters' key: a record must pass every filter on a handler, so listing both require_debug_false and require_debug_true would reject all messages

'class': 'logging.handlers.WatchedFileHandler',

'filename': '/var/log/dba/django_dba.log',

'formatter': 'simple'

},

},

'root': {

'level': 'DEBUG',

'handlers': ['console'],

},

'loggers': {

'coffeehouse': {

'handlers': ['development_logfile','production_logfile'],

},

'dba': {

'handlers': ['dba_logfile'],

},

'django': {

'handlers': ['development_logfile','production_logfile'],

},

'py.warnings': {

'handlers': ['development_logfile'],

},

}

}

Caution When using logging files, ensure the destination folder exists (e.g. /var/log/dba/) and the owner of the Django process has file access permissions.

Disable default Django logging configuration

The

'disable_existing_loggers':True statement at the top

of listing 5-18 disables Django's default logging configuration

from listing 5-15. This guarantees no default logging behavior is

applied to a Django project.

An alternative to disabling

Django's default logging behavior is to override the default

logging definitions on an individual basis, as any explicit

LOGGING configuration in settings.py

takes precedence over Django defaults even when

'disable_existing_loggers':False. For example, to

apply a different behavior to the console handler (e.g.

output messages for debug level, instead of default

info level) you can define a handler in

settings.py for console with a

debug level -- as shown in listing 5-18.

However, if you want to ensure no default logging configuration inadvertently ends up in a Django project, you must set 'disable_existing_loggers' to True. Also notice listing 5-18 re-declares the same default filters from listing 5-15, since the handlers in a custom LOGGING dictionary can only reference filters declared in that same dictionary.

Logging formatters: Message output

By default, Django doesn't define

a logging formatters section as you can confirm in

listing 5-15. However, listing 5-18 declares a

formatters section to generate log messages with

either a simpler or more verbose output.

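By default, Python log messages configured through logging.basicConfig() follow the format %(levelname)s:%(name)s:%(message)s, which means "Output the log message level, followed by the name of the logger and the log message itself", whereas a handler with no formatter assigned outputs just %(message)s.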

However, there is a lot more

information available through Python logging that can make log

messages more comprehensive. As you can see in listing 5-18, the

simple and verbose formatters use a

special syntax and a series of fields that are different from the

default. Table 5-2 illustrates the different Python formatter

fields including their syntax and meaning.

Table 5-2. Python logging formatter fields

| Field syntax | Description |
|---|---|
| %(name)s | Name of the logger (logging channel) |
| %(levelno)s | Numeric logging level for the message (DEBUG, INFO, WARNING, ERROR, CRITICAL) |
| %(levelname)s | Text logging level for the message ("DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL") |
| %(pathname)s | Full pathname of the source file where the logging call was issued (if available) |
| %(filename)s | Filename portion of pathname |
| %(module)s | Module (name portion of filename) |
| %(lineno)d | Source line number where the logging call was issued (if available) |
| %(funcName)s | Function name |
| %(created)f | Time when the log record was created (time.time() return value) |
| %(asctime)s | Textual time when the log record was created |
| %(msecs)d | Millisecond portion of the creation time |
| %(relativeCreated)d | Time in milliseconds when the log record was created, relative to the time the logging module was loaded (typically at application startup time) |
| %(thread)d | Thread ID (if available) |
| %(threadName)s | Thread name (if available) |
| %(process)d | Process ID (if available) |
| %(message)s | The result of record.getMessage(), computed just as the record is emitted |

You can add or remove fields to the format field for each formatter based on the fields in table 5-2. Besides the format field for each formatter, there's also a datefmt field that allows you to customize the output of the %(asctime)s format field in a formatter (e.g. with the datefmt field set to %Y-%m-%d %H:%M:%S, if a logging message occurs at midnight on new year's 2018, %(asctime)s outputs 2018-01-01 00:00:00).

Note The syntax for the datefmt field

follows Python's strftime() format[2].
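The effect of format and datefmt can be checked with a short sketch that formats a hand-built log record. Two things here are assumptions for the sake of a reproducible example: the record's creation time is pinned manually, and the formatter is told to render times in UTC rather than in local time, which is the default.

```python
import calendar
import logging
import time

formatter = logging.Formatter(
    fmt='[%(asctime)s] %(levelname)s %(message)s',
    datefmt='%Y-%m-%d %H:%M:%S',
)
# Assumption for reproducibility: render timestamps in UTC instead of local time
formatter.converter = time.gmtime

record = logging.LogRecord(
    name='coffeehouse', level=logging.INFO, pathname='example.py', lineno=1,
    msg='Happy new year', args=None, exc_info=None,
)
# Pin the creation time to midnight (UTC) on 2018-01-01
record.created = calendar.timegm((2018, 1, 1, 0, 0, 0))

line = formatter.format(record)
```

With these settings, line holds the text [2018-01-01 00:00:00] INFO Happy new year, which matches the simple formatter declared in listing 5-18.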

Logging handlers: Locations, classes, filters and logging thresholds

The first handler in listing 5-18 is the console handler which provides custom behavior over the default console handler in listing 5-15. The console handler in listing 5-18 lowers the threshold to the DEBUG level to process all log messages irrespective of their level. In addition, the console handler uses the custom simple formatter -- logging formatter syntax is described in the previous section -- and uses the same default console filters and class which tells Django to process log messages when DEBUG=True (i.e. 'filters': ['require_debug_true']) and send logging output to a stream (i.e. 'class': 'logging.StreamHandler').

In listing 5-18, you can also see

there are three different class values for each of the

remaining handlers: logging.FileHandler which sends

log messages to a standard file,

logging.handlers.RotatingFileHandler which sends log

messages to files that change based on a given threshold size and

logging.handlers.WatchedFileHandler which sends log

messages to a file that's managed by a third party utility (e.g.

logrotate).

The

development_logfile handler is configured to work for

log messages worse than DEBUG (inclusive) -- which is

technically all log messages -- and only when

DEBUG=True due to the require_debug_true

filter. In addition, the development_logfile handler

is set to use the custom verbose formatter and send

output to the /tmp/django_dev.log file.

The production_logfile handler is configured to work for log messages worse than ERROR (inclusive) -- which is just ERROR and CRITICAL log messages -- and only when DEBUG=False due to the require_debug_false filter. In addition, the handler uses the custom simple formatter and is set to send output to the file /var/log/django/django_production.log. The log file is rotated every time it reaches 100 MB (i.e. maxBytes) and up to five old log files (i.e. backupCount) are kept by appending a number (e.g. django_production.log.1, django_production.log.2).
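The rotation behavior of maxBytes and backupCount can be observed with a small sketch that uses a deliberately tiny size limit and a temporary folder. The file name app.log, the logger name rotation.example and the limits are assumptions picked so the rollover happens after a handful of messages.

```python
import logging
import logging.handlers
import os
import tempfile

def rotate_demo():
    """Write enough messages to force several rollovers; return the files left."""
    with tempfile.TemporaryDirectory() as folder:
        handler = logging.handlers.RotatingFileHandler(
            os.path.join(folder, 'app.log'),  # hypothetical file name
            maxBytes=200,    # tiny limit so rollover happens quickly
            backupCount=2,   # keep app.log.1 and app.log.2 only
        )
        logger = logging.getLogger('rotation.example')
        logger.setLevel(logging.INFO)
        logger.propagate = False
        logger.addHandler(handler)
        for i in range(30):
            logger.info('message number %02d with some padding text', i)
        # Release the file before the temporary folder is removed
        logger.removeHandler(handler)
        handler.close()
        return sorted(os.listdir(folder))

files = rotate_demo()
```

Even though many rollovers take place, only the active file plus two numbered backups remain, since older backups are discarded once backupCount is reached.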

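The dba_logfile handler is configured to work for log messages worse than DEBUG (inclusive) -- which is technically all log messages -- and is intended to work both when DEBUG=True and when DEBUG=False. Be aware that Python logging only lets a message through a handler if it passes every filter in the filters list, so declaring require_debug_true and require_debug_false together rejects all messages; to log regardless of the DEBUG value, the handler should declare no filters at all. In addition, the handler uses the custom simple formatter and is set to send output to the file /var/log/dba/django_dba.log.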
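Python logging requires a record to pass every filter attached to a handler, which matters when two opposite filters are combined. The sketch below shows this with the core logging package only: the DEBUG flag and the two filter classes are simplified stand-ins for Django's settings.DEBUG and its django.utils.log filters, not the real implementations.

```python
import logging

DEBUG = True  # stand-in for Django's settings.DEBUG

class RequireDebugTrue(logging.Filter):
    """Stand-in for the idea behind django.utils.log.RequireDebugTrue."""
    def filter(self, record):
        return DEBUG

class RequireDebugFalse(logging.Filter):
    """Stand-in for the idea behind django.utils.log.RequireDebugFalse."""
    def filter(self, record):
        return not DEBUG

class ListHandler(logging.Handler):
    """Hypothetical handler that stores messages in a list."""
    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())

# Handler with both opposite filters: one of them always rejects the record
both = ListHandler()
both.addFilter(RequireDebugFalse())
both.addFilter(RequireDebugTrue())

# Handler with no filters: accepts records whatever the DEBUG value
unfiltered = ListHandler()

logger = logging.getLogger('dba.example')  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(both)
logger.addHandler(unfiltered)

logger.error('Slow query detected')
```

The handler with both filters never receives the message, while the handler without filters does, so a handler meant to work in every environment is better left without DEBUG filters.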

The dba_logfile

handler is managed by the WatchedFileHandler class,

which has a little more functionality than the basic

FileHandler class used by the

development_logfile handler. The

WatchedFileHandler class is designed to check if a file changes and, if it does, reopen it, which in turn allows a log file to be managed/changed by a Linux log utility like

logrotate. The benefit of a log utility like logrotate is that it

allows Django to use more elaborate log file features (e.g.

compression, date rotation). Note that if you don't use a third

party utility like logrotate to manage a logfile that uses

WatchedFileHandler, a log file grows indefinitely.

Caution/Tip The RotatingFileHandler logging handler class in listing 5-18 is not safe for multi-process applications. Use the ConcurrentLogHandler logging handler class[3] to run on multi-process applications.

Tip The core Python logging package includes many other logging handler classes to process messages with things like Unix syslog, Email (SMTP) & HTTP[4]

Logging loggers: Python packages to use logging

The loggers section

in listing 5-18 defines the handlers to attach to Python packages

-- technically the attachment is done to logger names, but I used

this term since loggers are generally named after Python packages.

I'll provide an exception to this 'Python package=logger name'

shortly so you can gain a better understanding of this concept.

The first logger

coffeehouse tells Django to attach all the log

messages for itself and its children (e.g.

coffeehouse.about,

coffeehouse.about.views and

coffeehouse.drinks) to the

development_logfile and

production_logfile handlers. By assigning two

handlers, log messages from the coffeehouse logger

(and its children) are sent to two places.

Recall that by using Python's

__name__ syntax to define loggers -- see listing 5-16

& listing 5-17 -- the name of the loggers end up being based on the

Python package structure.

Next, you can see the

dba logger links all its log messages to the

dba_logfile handler. In this case, it's an exception

to the rule that loggers are named after Python packages. As you

can see in listing 5-17, a logger can be purposely named

dba and forgo using __name__ or another

convention related to Python packages.

Next in listing 5-18, the django and

py.warnings loggers are re-declared to obtain some of

Django's default behavior, given listing 5-18 uses

'disable_existing_loggers': True. The

django logger links all its log messages to the

development_logfile and

production_logfile handlers, since we want log

messages associated with the django package/logger and

its children (e.g. django.request,

django.security) to go to two log files.

Notice listing 5-18 doesn't

declare explicit loggers for django.request and

django.security unlike the Django default's in listing

5-15. Because the django logger automatically handles

its children and we don't need different handlers for each logger

-- like the default logging behavior-- listing 5-18 just declares

the django logger.

At the end of the listing 5-18,

the py.warnings logger links all its log messages to

the development_logfile handler, to avoid any trace of

py.warnings log messages in production logs.

Finally, there's the

root key in listing 5-18, which although declared

outside of the loggers section, is actually the

root logger for all loggers. The root key

tells Django to process messages from all loggers -- whether

declared or undeclared in the configuration -- and handle them in a

given way. In this case, root tells Django that all

log messages -- since the DEBUG level includes all

messages -- generated by any logger (coffeehouse, dba,

django, py.warnings or any other) be processed by the

console handler.

Disable email to ADMINS on errors

You may be surprised listing 5-18 makes no use of the mail_admins handler defined by default in listing 5-15. As I mentioned in the previous section on

Django default logging, the mail_admins handler sends

an email error notification for log messages generated by

django.request or django.security

packages/loggers.

While this can seem like an

amazing feature at first -- avoiding the hassle of looking through

log files -- once a project starts to grow it can become extremely

inconvenient. The problem with the default logging email error

notification mechanism or mail_admins handler is it

sends out an email every single time an error associated

with django.request or django.security

packages/loggers is triggered.

If you have 100 visitors per hour

on a Django site and all of them hit the same error, it means 100

email notifications are sent in the same hour. If you have three

people in ADMINS, then it means at least 300 email

notifications per hour. All this can add up quickly, so a couple of

different errors and a few thousand visitors a day can lead to

email overload. So as convenient as it can appear to get email log

error notifications, you should turn this feature off.

I recommend you stick to the old method of inspecting log files on a constant basis or, if you require the same real-time log error notifications provided by email, that you instead use a dedicated reporting system such as Sentry, which is described in the next section.

Logging with Sentry

As powerful a discovery mechanism as logging is, inspecting and making sense of log messages can be an arduous task. Django and Python projects are no different in this area. As it was shown in the previous section, relying on core logging packages still results in log messages being sent to either the application console or files, where making sense of log messages (e.g. the most relevant or common log message) can lead to hours of analysis.

Enter Sentry, a reporting and aggregation application. Sentry facilitates the inspection of log messages through a web based interface, where you can quickly determine the most relevant and common log messages.

To use Sentry you need to follow two steps: Set up Sentry to receive your project log messages and set up your Django project to send log messages to Sentry.

Although there are alternatives that offer log monitoring functionality similar to Sentry (e.g. OverOps, Airbrake, Raygun), what sets Sentry apart is that it's built as a Django application!

Even though Sentry has evolved considerably to the point it's now a very complex Django application, the fact that Sentry is an open-source project based on Django makes it an almost natural option to monitor Django projects, since you can install and extend it using your Django knowledge -- albeit there are software-as-a-service Sentry alternatives.

Set up Sentry the application

You can set up Sentry in two ways: Install it yourself or use a software-as-service Sentry provider.

Sentry is a Django open source project, so the full source code is freely available to anyone[5]. But before you go straight to download Sentry and proceed with the installation[6], beware Sentry has grown considerably from its Django roots. Sentry now requires a Docker environment, the relational Postgres database and the NoSQL Redis database. Unfortunately, Sentry evolved to support a wide variety of programming languages and platforms, to the point it grew in complexity and is no longer a simple Django app installation. So if you install Sentry from scratch, expect to invest a couple of hours setting it up.

Tip Earlier Sentry releases (e.g. v. 5.0[7]) don't have such strict dependencies and can run like basic Django applications (e.g. any Django relational database, no Docker, no NoSQL database). They represent a good option for simple Sentry installations, albeit they require dated Django versions (e.g. Django v. 1.4).

The Sentry creators and maintainers run the software-as-a-service: https://sentry.io. The Sentry software-as-a-service offers three different plans: a hobbyist plan that's free for up to 10,000 events per month and is designed for one user; a professional plan that's $12 (USD) a month, which starts at 50,000 events per month and is designed for unlimited users; and an enterprise plan with custom pricing for millions of events and unlimited users.

Since you can set up Sentry for free in a few minutes with just your email -- and no credit card -- the Sentry software-as-a-service from https://sentry.io is a good option to try out Sentry. And even after trying it out, at a cost of $12 (USD) per month for 50,000 events and $0.00034 per additional event, it represents a good value -- considering an application that generates 50,000 events per month should have a considerable audience to justify the price.

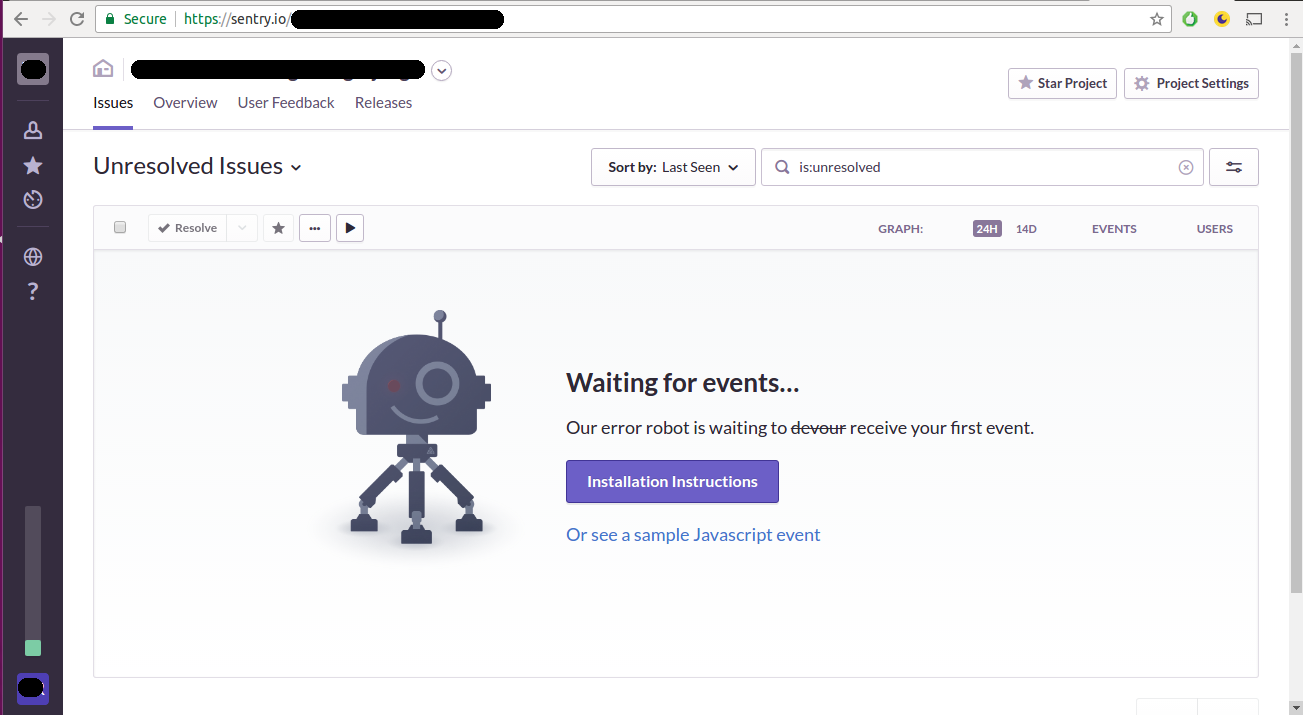

Once you create a sentry.io account you'll enter into the main dashboard. Create a new Django project. Take note of the client key or DSN which is a long url that contains the @sentry.io snippet in it -- this is required to configure Django projects to send log messages to this particular Sentry Django project. If you missed the project client key or DSN, click on the top right 'Project Settings' button illustrated in figure 5-1, and select the bottom left option 'Client Keys (DSN)' to consult the value.

Figure 5-1 Sentry SaaS project dashboard

As you can see in figure 5-1, the Sentry SaaS project dashboard acts as a central repository to consult all project logging activity. In figure 5-1 you can also see the various action buttons that allow log messages to be sorted, searched, charted, as well as managed by different users. All of this creates a very efficient environment in which to analyze any Django logging activity in real time.

Once you have Sentry set up, you can configure a Django project to send log messages to a Sentry project.

Set up a Django application to use Sentry

To use Sentry in a Django project

you require a package called Raven to establish communication

between the two parties. Simply do pip install raven

to install the latest Raven version.

Once you install Raven, you must

declare it in the INSTALLED_APPS list of your

project's settings.py file as illustrated in listing

5-19. In addition, it's also necessary to configure Raven to

communicate with a specific Sentry project via a DSN value, through

the RAVEN_CONFIG variable also shown in listing

5-19.

Listing 5-19 Django project configuration to communicate with Sentry via Raven

INSTALLED_APPS = [

...

'raven.contrib.django.raven_compat',

...

]

RAVEN_CONFIG = {

'dsn': '<your_dsn_value>@sentry.io/<your_dsn_value>',

}

As you can see in listing 5-19,

the RAVEN_CONFIG variable should declare a

dsn key with a value corresponding to the DSN value

from the Sentry project that's to receive the log messages.

After you set up this minimum

Raven configuration, you can send a test message running the

python manage.py raven test command from a Django

project's command line. If the test is successful, you will see a

test message in the Sentry dashboard presented in figure 5-1.

Once you confirm communication

between your Django project and Sentry is successful, you can set

up Django logging to send log messages to Sentry. To Django's

logging mechanism, Sentry is seen as any other handler (e.g. file,

stream), so you must first declare Sentry as a logging handler

using the raven.contrib.django.handlers.SentryHandler

class, as illustrated in listing 5-20.

Listing 5-20 Django logging handler for Sentry/Raven

LOGGING = {

...

'handlers': {

....

'sentry': {

'level': 'ERROR',

'class': 'raven.contrib.django.handlers.SentryHandler',

},

...

}

The sentry handler in listing 5-20 tells Django to handle log messages with an ERROR level or worse through Sentry. Once you have a Sentry

handler, the last step is to use the sentry handler on

loggers to assign which packages/loggers get processed through

Sentry (e.g. django.request or root

logger, as described in the earlier 'Logging loggers' section).
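As a sketch of that last step, the following fragment attaches the sentry handler from listing 5-20 to a logger. The choice of the django.request logger, its level and its propagate value are assumptions made for illustration; you can equally attach the handler to the root key or to your own project loggers.

```python
# Hypothetical fragment of settings.py, reusing the sentry handler from listing 5-20
LOGGING = {
    'version': 1,
    'disable_existing_loggers': False,
    'handlers': {
        'sentry': {
            'level': 'ERROR',
            'class': 'raven.contrib.django.handlers.SentryHandler',
        },
    },
    'loggers': {
        # Assumption: only request errors are forwarded to Sentry
        'django.request': {
            'handlers': ['sentry'],
            'level': 'ERROR',
            'propagate': False,
        },
    },
}
```

In a real project this logger entry would sit alongside the other handlers and loggers of an existing LOGGING configuration, such as the one in listing 5-18.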